🍀 𝐍𝐞𝐰 𝐘𝐞𝐚𝐫. 𝐍𝐞𝐱𝐭 𝐋𝐞𝐯𝐞𝐥 𝐟𝐨𝐫 𝐊𝐚𝐭𝐚𝐧𝐚 𝐋𝐚𝐛𝐬.

As we enter the new year, Katana Labs moves into its 𝐧𝐞𝐱𝐭 𝐜𝐨𝐦𝐦𝐞𝐫𝐜𝐢𝐚𝐥 𝐜𝐡𝐚𝐩𝐭𝐞𝐫.

After two years of focused product development, a successful market entry, team growth, and our first customer engagements, we are ready to scale – transitioning from pilot engagements to full commercial growth.

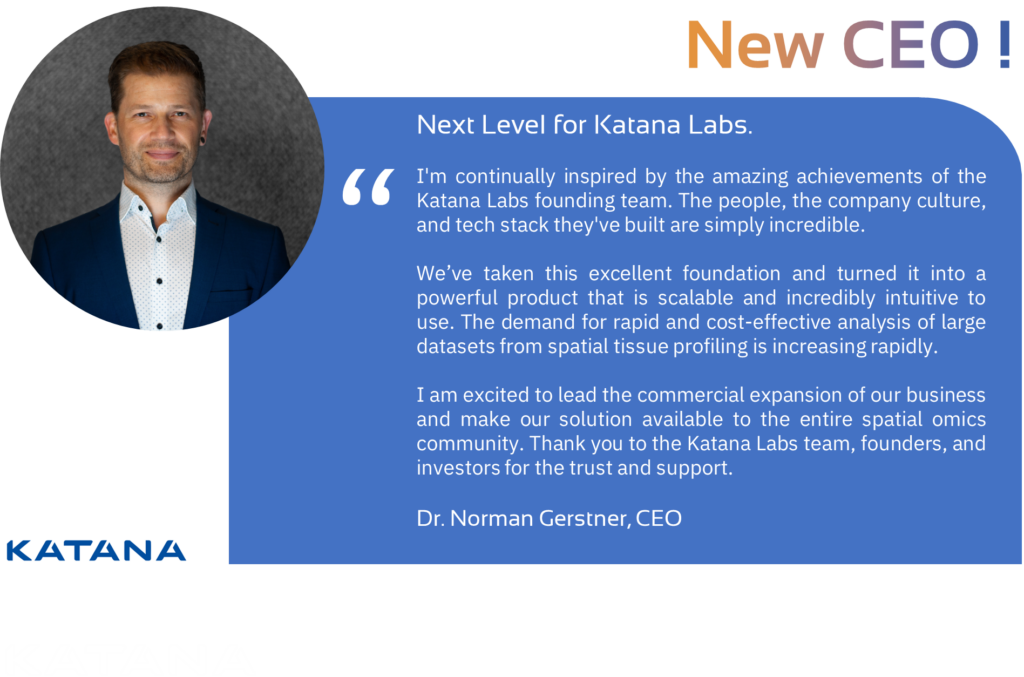

🚀 To lead this next phase, we are pleased to announce that Dr. Norman Gerstner will assume the role of 𝐂𝐄𝐎 𝐚𝐬 𝐨𝐟 𝐉𝐚𝐧𝐮𝐚𝐫𝐲 𝟐𝟎𝟐𝟔. Norman joined Katana Labs in 2025 to spearhead our marketing and sales efforts during the market entry phase. With nearly two decades of experience across DeepTech, life sciences, and precision oncology, he brings a 𝐬𝐭𝐫𝐨𝐧𝐠 𝐭𝐫𝐚𝐜𝐤 𝐫𝐞𝐜𝐨𝐫𝐝 in commercial execution and strategic leadership.

The whole Katana Labs team is deeply grateful to Falk Zakrzewski, Nicolaus Widera and Walter de Back for building the strong technological and cultural foundation we stand on today.

Our founders and investors are confident that Katana Labs will unlock its full potential under Norman’s leadership as we scale our platform, expand our customer footprint and establish the company as an 𝐢𝐧𝐭𝐞𝐫𝐧𝐚𝐭𝐢𝐨𝐧𝐚𝐥 𝐩𝐥𝐚𝐲𝐞𝐫 𝐢𝐧 𝐭𝐡𝐞 𝐓𝐞𝐜𝐡𝐁𝐢𝐨 𝐬𝐞𝐜𝐭𝐨𝐫.

𝐒𝐩𝐚𝐭𝐢𝐚𝐥 𝐭𝐢𝐬𝐬𝐮𝐞 𝐩𝐫𝐨𝐟𝐢𝐥𝐢𝐧𝐠 is rapidly reshaping the precision medicine value chain – from translational research through clinical development to better clinical trial outcomes.

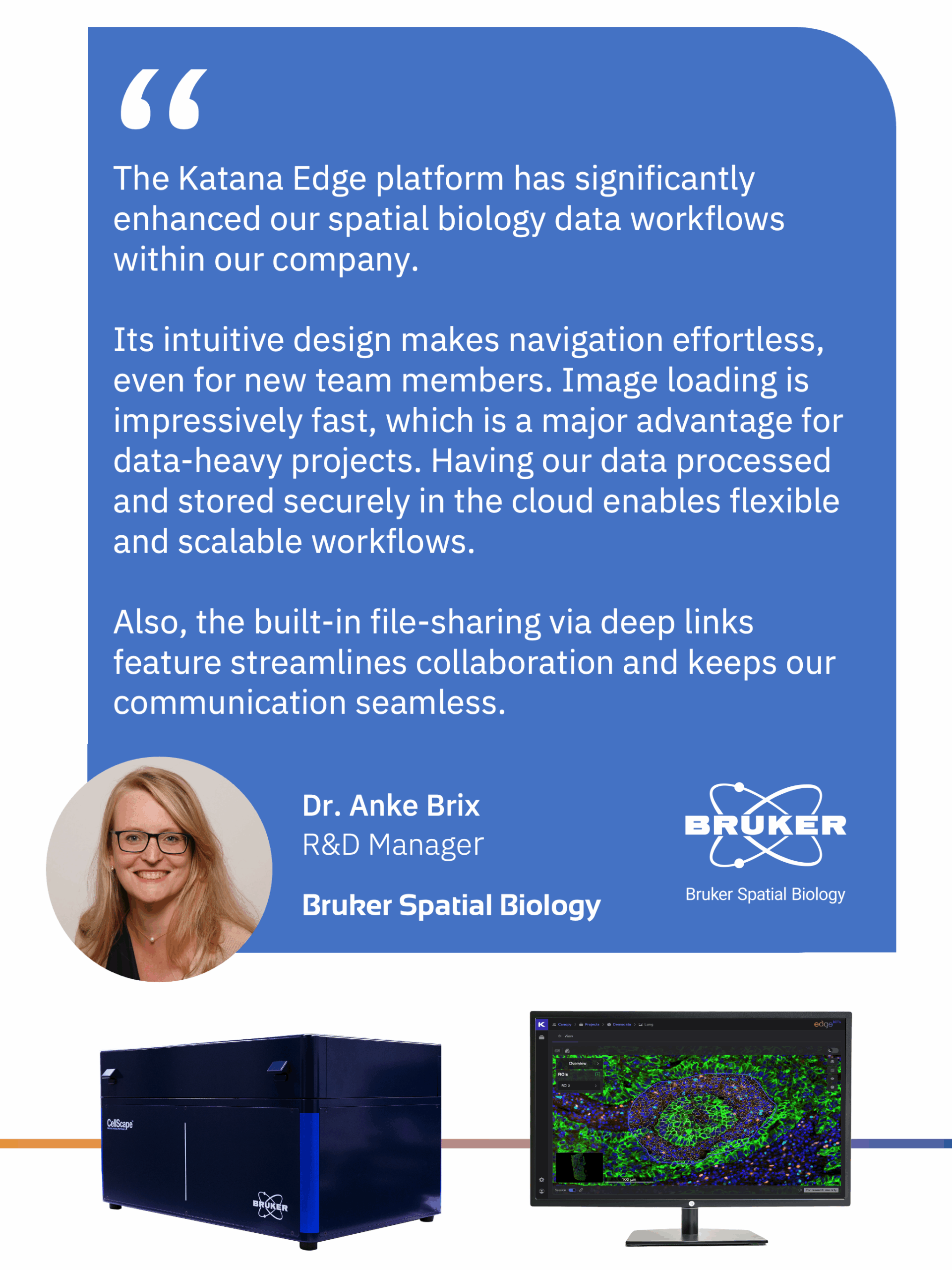

At Katana Labs, we build the data infrastructure and AI/ML tools that make actionable insights, deeply embedded in biological tissue samples, accessible across biological scales and at enterprise level. Alongside our B2B enterprise SaaS platform 𝐊𝐚𝐭𝐚𝐧𝐚 𝐄𝐝𝐠𝐞, we provide expert services in image analysis and data analytics for spatial biology and spatial multi-omics.

✨ Founders, investors, and the entire team are excited to enter this 𝐧𝐞𝐱𝐭 𝐠𝐫𝐨𝐰𝐭𝐡 𝐩𝐡𝐚𝐬𝐞 𝐨𝐟 𝐊𝐚𝐭𝐚𝐧𝐚 𝐋𝐚𝐛𝐬.